Potable Water Reuse Report

Published by the University of Southern California ReWater Center in collaboration with Trussell

Series 2, Issue 1

17 December 2024

Pathogen Reduction Crediting: It's not what you can remove, it's what you can prove

Key Takeaways

- Potable water reuse requires much higher pathogen reduction than conventional drinking water treatment.

- As with drinking water treatment, potable reuse is regulated by comparing the log10 reduction value (LRV) requirements for pathogens with the LRV credits that can be achieved by the individual treatment processes in the facility.

- This LRV crediting approach is used because we are technically incapable of measuring pathogens in treated water down to the very low levels needed.

- Frameworks exist to assign pathogen LRV credits for some treatment processes; many other processes remain under-credited or entirely uncredited.

- To improve the efficiency and economics of potable reuse, existing crediting frameworks need to be expanded for under-credited processes and new frameworks need to be created for uncredited processes.

Introduction

The first series of the Potable Water Reuse Report focused on the factors shaping direct potable reuse (DPR) regulations across the globe. One constant across all locations—regardless of regulatory development—is the high level of pathogen control required to protect public health. This second series turns its focus to the importance of pathogen crediting frameworks, which are vital to ensure treatment systems actually achieve sufficient pathogen control to protect public health.

Why bother with crediting frameworks? Can’t we just test the treated water to prove that it’s ready to drink? The short answer is no: we are technically incapable of measuring waterborne pathogens down to the very low levels needed for drinking water. Consequently, a different approach was developed that does not rely on monitoring the treated water. Instead, it defines the level of treatment needed to reduce the very high level of pathogens in wastewater down to the very low levels needed for drinking water. Rather than analyzing the treated water, potable reuse plants must demonstrate that the treatment they provide meets or exceeds minimum treatment levels. One corollary of this approach, however, is that it relies on frameworks to quantify how much credit each treatment process in a treatment train should receive.

The United States Environmental Protection Agency (U.S. EPA) created several crediting frameworks to support their surface water treatment regulations in the 1980s and 1990s. Surface waters have far fewer pathogens than wastewater, so pathogen reduction requirements for systems treating surface waters are lower than those for potable reuse systems. Therefore, in the existing frameworks, the maximum credits assigned for each treatment process were often capped at lower levels than the processes could actually achieve. When considering the use of the same treatment processes in a potable water reuse facility, crediting them to their full potential could lower the cost of treatment and avoid overdesign.

In this series, we address the need to optimize, revise, and create new pathogen reduction crediting frameworks for potable reuse. This first issue provides an overview of pathogen crediting, summarizes frameworks currently in use, and explains the need for modification of existing frameworks and the development of new frameworks for potable reuse applications.

1) Why we need crediting frameworks

Ideally, we could stick a probe into a glass of water and confirm that it’s ready to drink. Unfortunately, we cannot, for several reasons: 1) there are hundreds of different types of pathogens in wastewater and it is impossible to monitor them all; 2) the presence of even a single pathogen may be enough to cause an infection; and 3) lab methods are not sensitive enough to detect pathogens at the low levels needed for drinking water.

There are hundreds of different pathogens with different susceptibilities to treatment

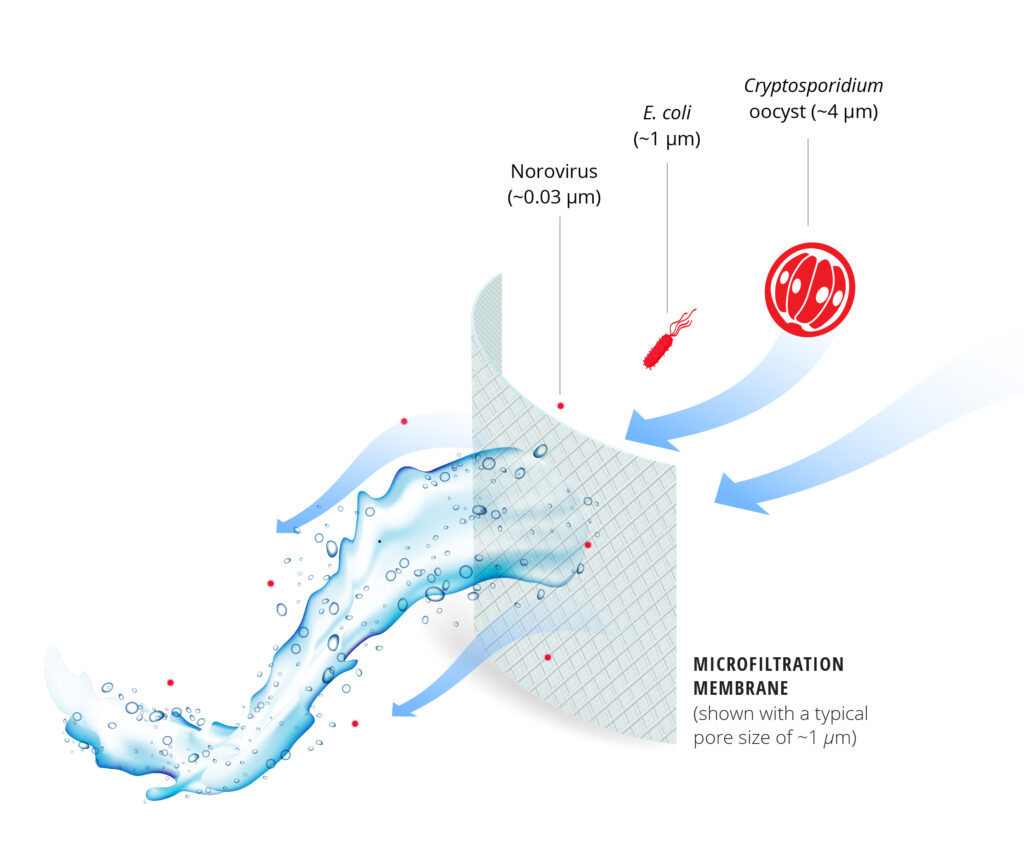

Wastewater contains hundreds of different pathogens that cause a wide variety of diseases, from diarrhea and dysentery to typhoid fever, hepatitis, and even chronic illnesses like heart disease, liver disease, and cancer. The four major groups of pathogens are viruses, bacteria, protozoa (such as Giardia cysts and Cryptosporidium oocysts), and the eggs of parasitic worms (helminths). Each has unique characteristics that determine its susceptibility to treatment or removal. For example, Cryptosporidium oocysts are resistant to chlorine but can be removed by filtration because of their relatively large size. Viruses, on the other hand, are susceptible to chlorine, but are not as well-removed by filtration because of their small size (Fig. 1).

Rather than considering every pathogen potentially present, drinking water and potable reuse regulations focus on a small number of reference pathogens. Typically, pathogens that exhibit a high resistance to treatment and disinfection are selected as reference pathogens. While the World Health Organization (WHO) previously developed guidelines for pathogen control based on indicator bacteria, it now recommends developing regulations based on microbial risks from reference pathogens. Globally, water reuse regulations based on reference pathogens tend to use similar pathogen groups: protozoa such as Cryptosporidium oocysts (Australia, European Union, U.S.), bacteria such as Campylobacter (Australia, European Union), and viruses including norovirus, rotavirus, and adenovirus (Australia, European Union, U.S.).

Pathogens are present at high concentrations in wastewater; as few as one may cause an infection

Because pathogens are excreted in high numbers, their concentrations in wastewater are also very high. This poses a great challenge for potable reuse treatment: achieving very high levels of pathogen reduction. Consider the following: there are 100 billion noroviruses in just one gram of feces, but the ingestion of even a single norovirus may be sufficient to cause an infection in some people. Because of this, we need to provide very high levels of treatment to reduce pathogen concentrations down to the very low levels needed for drinking water. In fact, pathogen reduction requirements are so high that it is not practical to show them as percentages. Instead, we use log10 notation, where a 1-log10 reduction is the same as a 90% reduction (see this article for a detailed discussion). The 12-log10 virus reduction that is required in several U.S. potable reuse regulations is equivalent to removing 99.9999999999% of the viruses in water.
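The relationship between log10 reduction values and percentages can be sketched in a few lines of Python. This is only an illustration of the arithmetic described above; the function names are our own and do not come from any regulation or software package.

```python
import math


def fraction_remaining(lrv: float) -> float:
    """Fraction of organisms remaining after a given log10 reduction."""
    return 10.0 ** (-lrv)


def percent_reduction(lrv: float) -> float:
    """Percent reduction equivalent to a given log10 reduction value (LRV)."""
    return (1.0 - fraction_remaining(lrv)) * 100.0


def lrv_from_concentrations(c_in: float, c_out: float) -> float:
    """LRV implied by concentrations before and after treatment (same units)."""
    if c_in <= 0 or c_out <= 0:
        raise ValueError("concentrations must be positive")
    return math.log10(c_in / c_out)


if __name__ == "__main__":
    for lrv in (1, 2, 4, 12):
        # Each additional log10 removes 90% of whatever is still present.
        print(f"{lrv}-log10 reduction: 1 in 10^{lrv} organisms remains")
```

Each additional log10 of reduction removes 90% of the organisms that remain, which is why the percentages quickly become a long string of nines.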

Pathogens can’t be detected at low enough levels

While laboratory methods are capable of concentrating and quantifying pathogens in samples as large as 1,000 liters, this volume is nowhere near what would be required to demonstrate compliance with regulations (Fig. 2). Method sensitivity would need to be increased a thousand-fold (or more) to confirm the levels specified by regulations. Furthermore, processing a sample of 1,000 liters already presents great difficulties in terms of timing and cost: the concentration step alone may take a whole day to perform prior to running the test, and the whole procedure can cost $1,000 USD per sample. Due to these constraints, it is not possible to measure pathogens in treated water with sufficient sensitivity and speed.

Figure 2: A key challenge of monitoring pathogens in treated water is the impossibly large volumes of water that would need to be processed.
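A rough sketch of why sample volume limits sensitivity is given below. It assumes, as a simplification of our own, that a method can at best detect one organism in the whole sample and that recovery is perfect unless stated otherwise; real methods have lower recovery and additional sources of uncertainty, and the function names are hypothetical.

```python
def detection_limit_per_liter(sample_volume_l: float, recovery: float = 1.0) -> float:
    """Lowest concentration (organisms/L) at which one organism is expected
    to be recovered from a sample of the given volume.

    Simplifying assumption: a single recovered organism is detectable.
    """
    if sample_volume_l <= 0 or not 0 < recovery <= 1:
        raise ValueError("volume must be positive and recovery in (0, 1]")
    return 1.0 / (sample_volume_l * recovery)


def volume_for_target(target_per_liter: float, recovery: float = 1.0) -> float:
    """Sample volume (L) needed to reach a target detection limit."""
    if target_per_liter <= 0 or not 0 < recovery <= 1:
        raise ValueError("target must be positive and recovery in (0, 1]")
    return 1.0 / (target_per_liter * recovery)


if __name__ == "__main__":
    limit_now = detection_limit_per_liter(1_000.0)
    # A thousand-fold improvement in sensitivity, as mentioned in the text.
    needed = volume_for_target(limit_now / 1_000.0)
    print(f"1,000 L sample: limit of about {limit_now:g} organisms/L")
    print(f"Thousand-fold lower limit: about {needed:,.0f} L per sample")
```

Under these assumptions, a thousand-fold gain in sensitivity means processing roughly a thousand times more water per sample, which is the practical obstacle illustrated in Fig. 2.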

The need for crediting frameworks

Because it’s not possible to measure pathogens down to the very low levels needed for drinking water, a new framework was developed for public health protection. This risk-based approach determines what levels of treatment are required to reduce the concentrations of pathogens in a source water (including wastewater) down to acceptable levels for drinking. These frameworks often specify log10 reduction value (LRV) requirements for a range of reference pathogens. Well-known LRV requirements for potable reuse include the 12/10/10 requirements for virus/Giardia/Cryptosporidium in California and the 9.5/8/8.1 requirements for virus/Cryptosporidium/Campylobacter in Australia. Regulations often require that multiple barriers be used to meet the pathogen LRV requirements. To ensure these massive reductions are achieved, pathogen LRV crediting frameworks are being established to assign credits to each treatment process in a potable reuse facility.

2) We need to revise existing crediting frameworks and create new ones

Around the globe, potable reuse regulations and guidance documents include high pathogen LRV requirements. Meeting these requirements is achieved by crediting individual treatment processes with LRVs for the reference pathogens (typically, viruses, Cryptosporidium, and Campylobacter). LRVs are additive, so the pathogen LRV credits for treatment processes in series can be summed together to get the total LRV credit for each pathogen (Fig. 3).

Figure 3: Potable reuse facilities require higher pathogen reduction because wastewater has higher concentrations of pathogens than surface water. Pathogen reduction is achieved by adding together the log10 reduction values (LRVs) of the multiple treatment processes.
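The additive bookkeeping described above can be sketched as follows. The 12/10/10 requirement is the California example quoted earlier; the per-process credits and the process names are hypothetical placeholders chosen only to show the arithmetic, not credits granted by any regulator.

```python
from typing import Dict

PATHOGENS = ("virus", "giardia", "cryptosporidium")

# California 12/10/10 requirement for virus/Giardia/Cryptosporidium (from the text).
REQUIRED_LRV: Dict[str, float] = {
    "virus": 12.0,
    "giardia": 10.0,
    "cryptosporidium": 10.0,
}

# Hypothetical treatment train: process name -> LRV credit per pathogen.
EXAMPLE_TRAIN: Dict[str, Dict[str, float]] = {
    "process_a": {"virus": 0.0, "giardia": 4.0, "cryptosporidium": 4.0},
    "process_b": {"virus": 2.0, "giardia": 2.0, "cryptosporidium": 2.0},
    "process_c": {"virus": 6.0, "giardia": 6.0, "cryptosporidium": 6.0},
}


def total_credits(train: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Sum the LRV credits of processes in series, pathogen by pathogen."""
    return {
        p: sum(credits.get(p, 0.0) for credits in train.values())
        for p in PATHOGENS
    }


def shortfall(train: Dict[str, Dict[str, float]],
              required: Dict[str, float]) -> Dict[str, float]:
    """LRV still missing for each pathogen (0.0 when the requirement is met)."""
    totals = total_credits(train)
    return {p: max(0.0, required[p] - totals[p]) for p in required}


if __name__ == "__main__":
    print("Total credits:", total_credits(EXAMPLE_TRAIN))
    print("Shortfall:", shortfall(EXAMPLE_TRAIN, REQUIRED_LRV))
```

In this made-up train, the protozoa requirements are met but the virus total falls short, so either another barrier or a higher credit for an existing process would be needed.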

Some countries (e.g., Australia, Switzerland, the U.S.) have existing pathogen LRV crediting frameworks for processes like chlorination, ozonation, membrane filtration, and ultraviolet (UV) disinfection. In the U.S., these frameworks were developed to show compliance with surface water treatment regulations, which typically require 2-, 3-, and 4-log10 reduction of Cryptosporidium, Giardia, and viruses, respectively. Because of this, the frameworks were generally capped at 2 to 4 LRV credits, which were the maximum levels needed for compliance. One shortcoming of existing frameworks, therefore, is that they may underestimate the LRVs that the treatment processes are actually capable of achieving.

Existing LRV crediting frameworks for surface waters have provided a starting point, but they need to be modified and expanded to consider the much greater pathogen control required for potable reuse (Fig. 4), as well as differences in feedwater characteristics compared to conventional supplies (e.g., different levels of turbidity, nutrients, organics, etc.). In addition, new crediting frameworks need to be created for treatment processes that are currently uncredited. Revising current and creating new pathogen LRV crediting frameworks will also improve the economics and efficiency of potable reuse. By maximizing the value of existing processes, resources are not wasted on otherwise unnecessary processes, reducing both the cost and complexity of treatment.

Figure 4: The pathogen log10 reduction value (LRV) requirements for potable reuse can be so high that even robust treatment trains cannot meet them under existing LRV crediting frameworks. The example shows a direct potable reuse treatment train that fails to meet the 20/14/15 LRV requirements for virus/Giardia/Cryptosporidium (V/G/C) in California and highlights the need for new/modified crediting frameworks.

3) Template for future pathogen crediting frameworks

General Approach

Existing pathogen LRV crediting frameworks were developed using the following general approach: 1) first, the processes were studied with the goal of understanding the mechanisms contributing to pathogen reduction and the factors (environmental, design, and operational) influencing those mechanisms; 2) then, surrogates for pathogen reduction that can be continuously measured were identified; and 3) lastly, an operational monitoring strategy and critical control limits were established. This same approach should be used for development of new crediting frameworks or modification of existing crediting frameworks for potable reuse applications.

Because of the limitations described above related to the detection of pathogens in the laboratory and the inability to monitor pathogens in real-time, crediting frameworks do not rely on direct measurements of pathogens. Instead, they track pathogen reduction indirectly using surrogates that can be measured continuously with online meters. Surrogates should be directly and conservatively tied to changes in pathogen reduction efficiency, ideally with enough sensitivity to demonstrate a range of LRV credits.

Identifying good surrogates for process monitoring can be challenging, especially if there are gaps in the scientific knowledge about the mechanisms of pathogen reduction in those processes, or about the design, operational, or environmental factors that influence pathogen reduction. Table 1 provides a summary of existing crediting frameworks for chlorination, membrane filtration, ozonation, and ultraviolet (UV) treatment in the context of the three-step process identified above. The subsections below go into detail on two examples: chlorination and membrane filtration.

Indicators, surrogates, & pathogens, oh my!

There are hundreds of different types of pathogens in wastewater that are removed with different efficacies in treatment processes. It is impractical to monitor all of them. Reference pathogens are considered the most conservative groups due to their high concentrations in wastewater, high pathogenicity, and high persistence in treatment and disinfection processes. Surrogates are parameters that can be quantified continuously to monitor the efficacy of pathogen reduction by a treatment process. Indicators are microorganisms or compounds used to estimate pathogen reduction in a treatment process but cannot necessarily be continuously monitored.

| Process | Mechanism(s) of Pathogen Reduction | Factor(s) Influencing Pathogen Reduction | Surrogate(s) | Limitation(s) for Crediting in Potable Reuse |
|---|---|---|---|---|
| Chlorination | | | | |
| Membrane filtration | | | | |
| Ozone | | | | |
| UV | | | | |

Example: Chlorination

Mechanisms and influencing factors. The mechanism of pathogen reduction by chlorination is related to the reaction of chlorine with structural components of the pathogen, such as its cell membrane, genome, and proteins, causing irreversible damage and killing the pathogen (rendering it non-viable). The main factor influencing the extent of pathogen reduction is the chlorine dose, expressed as the product of the residual chlorine concentration (C) and the contact time (T) (i.e., the CT value). Pathogen destruction occurs with varying efficacy for different forms of chlorine (e.g., free chlorine, chlorine dioxide, chloramines). Water temperature, pH, and turbidity have been identified as important influencing factors in both drinking water and water reuse frameworks.

Surrogates. The surrogate typically used to assign pathogen LRV credits in chlorination systems is the CT value. CT is calculated in real-time by measuring the residual chlorine concentration (C) and multiplying it by the contact time (T). Contact times for a given reactor are often estimated based on the flow rate and volume of the basin and adjusted using baffling factors or a tracer study.
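The CT calculation described above can be sketched as follows. The units (cubic meters, cubic meters per minute, mg/L) and the example numbers are our own assumptions for illustration; the baffling factor is treated as a simple multiplier between 0 and 1 applied to the theoretical detention time.

```python
def contact_time_min(basin_volume_m3: float,
                     flow_m3_per_min: float,
                     baffling_factor: float) -> float:
    """Effective contact time T (minutes).

    Theoretical detention time (volume / flow) is scaled by a baffling
    factor in (0, 1] to account for short-circuiting in the basin.
    """
    if basin_volume_m3 <= 0 or flow_m3_per_min <= 0:
        raise ValueError("volume and flow must be positive")
    if not 0 < baffling_factor <= 1:
        raise ValueError("baffling factor must be in (0, 1]")
    return baffling_factor * basin_volume_m3 / flow_m3_per_min


def ct_value(residual_chlorine_mg_per_l: float, contact_time: float) -> float:
    """CT (mg*min/L): residual chlorine concentration times contact time."""
    if residual_chlorine_mg_per_l < 0 or contact_time < 0:
        raise ValueError("inputs must be non-negative")
    return residual_chlorine_mg_per_l * contact_time


if __name__ == "__main__":
    # Illustrative values only, not taken from any facility.
    t = contact_time_min(basin_volume_m3=500.0, flow_m3_per_min=10.0,
                         baffling_factor=0.5)
    ct = ct_value(residual_chlorine_mg_per_l=1.2, contact_time=t)
    print(f"T = {t:.1f} min, CT = {ct:.1f} mg*min/L")
```

Because flow and residual chlorine are both measured online, a calculation like this can be refreshed continuously, which is what makes CT usable as a surrogate.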

Monitoring & control. Residual chlorine is monitored at the outlet of the contact reactor or pipeline where contact time is achieved. The flow rate is measured in real-time and used to calculate the contact time. LRV credits are obtained by calculating the CT value. Temperature and pH are also monitored in real-time in the contact chamber. The U.S. EPA published CT “look-up” tables that allow users to identify the CT value required to achieve LRV credits for viruses and Giardia at the design water temperature. For free chlorine, the CT values are also dependent on pH.

Adaptations for potable reuse. The CT tables developed by the U.S. EPA for drinking water were based on studies of hepatitis A virus and Giardia, and on an assumption that the pathogen die-off kinetics were log-linear. The CT tables do not extend beyond 3 LRV credits for Giardia cysts or 4 LRV credits for viruses. CT look-up tables are not provided for Cryptosporidium oocysts, which are resistant to chlorine. While CT look-up tables have provided a starting point for pathogen LRV crediting in potable reuse systems, more studies are needed to determine how to adapt this crediting approach for potable reuse systems, where the turbidity and chlorine demand may be different than in conventional drinking water treatment plants. In 2017, Australia developed a validation protocol for chlorine disinfection in recycled water (part of their WaterVal™ program) using coxsackievirus B5, which is one of the most resistant viruses to chlorination. One limitation of the CT tables is that they are still limited to 4 LRV credits for viruses. However, recent studies have indicated that coxsackievirus B5 can experience up to a 6-log10 reduction by free chlorine disinfection at typical design CT values.
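The effect of a credit cap under the log-linear assumption mentioned above can be sketched as follows. The `ct_per_log` value is a hypothetical placeholder, not a number from any CT table; actual tables depend on temperature, and on pH for free chlorine, and should be consulted directly.

```python
from typing import Optional


def log_linear_lrv(ct: float, ct_per_log: float) -> float:
    """LRV under log-linear kinetics: each `ct_per_log` of CT gives 1 log10."""
    if ct < 0 or ct_per_log <= 0:
        raise ValueError("ct must be non-negative and ct_per_log positive")
    return ct / ct_per_log


def credited_lrv(ct: float, ct_per_log: float,
                 max_credit: Optional[float] = None) -> float:
    """LRV credit after applying an optional framework cap."""
    lrv = log_linear_lrv(ct, ct_per_log)
    return lrv if max_credit is None else min(lrv, max_credit)


if __name__ == "__main__":
    # Hypothetical: 2 mg*min/L per log10 of virus reduction, CT of 12 mg*min/L.
    ct, ct_per_log = 12.0, 2.0
    print("Without a cap:", credited_lrv(ct, ct_per_log))
    print("With a cap of 4 LRV credits:", credited_lrv(ct, ct_per_log, max_credit=4.0))
```

In this made-up case, the reduction implied by the kinetics exceeds the credit that a framework capped at 4 LRV would grant, which is the kind of under-crediting that an extended framework would need to address with supporting validation data.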

Example: Microfiltration and ultrafiltration

Mechanisms and influencing factors. The primary mechanisms that contribute to pathogen reduction in microfiltration (MF) and ultrafiltration (UF) systems are (1) size exclusion (straining) by the membrane pores or by the accumulated cake layer, (2) adsorption to the membrane itself, and (3) entrapment within extracellular polymeric substances present in biological fouling layers. The efficacy of pathogen reduction depends on the relative difference between the diameter of the pathogen and the size of the pores. As such, MF membranes, which have pore sizes ranging from 0.1 to 10 µm, may be less effective at removing pathogens by size exclusion compared to UF membranes, which have pore sizes ranging from 10 nm to 0.1 µm (Fig. 1). Membrane integrity has been identified as the most important influencing factor, as breaches in the membrane can let pathogens pass through more readily.

Surrogates. Direct integrity testing and indirect integrity monitoring are the two surrogates used for pathogen LRV crediting in MF and UF systems, as outlined in the U.S. EPA’s Membrane Filtration Guidance Manual. Direct integrity tests (either pressure-based or marker-based) are performed daily, while indirect integrity monitoring (e.g., filtrate turbidity monitoring or particle counting) is done continuously. A direct integrity test must be capable of detecting a membrane breach that is 3 µm or less in size, while indirect integrity monitoring uses filtrate water quality to determine if a breach has occurred. MF and UF processes typically get 4 LRV credits for Giardia and Cryptosporidium but no credits for viruses.

Monitoring & control. Facilities must monitor and report the results of direct integrity tests and indirect integrity monitoring. If the membrane passes both integrity checks, then the full LRV credit can be claimed. If indirect integrity monitoring indicates a breach (e.g., filtrate turbidity greater than 0.15 NTU in drinking water), a direct integrity test is triggered to determine the LRV credit.
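The monitoring logic described above can be sketched as a small decision function. The 0.15 NTU trigger and the 4 LRV full credit are the drinking water examples from the text. The function names, the choice to return `None` while a triggered test is pending, and the cap of the triggered result at the full credit are our own assumptions.

```python
from typing import Optional

# Drinking water turbidity trigger quoted in the text; a reuse permit may differ.
TURBIDITY_TRIGGER_NTU = 0.15


def breach_suspected(filtrate_turbidity_ntu: float,
                     trigger_ntu: float = TURBIDITY_TRIGGER_NTU) -> bool:
    """Indirect integrity monitoring: flag a possible breach on high turbidity."""
    return filtrate_turbidity_ntu > trigger_ntu


def mf_uf_credit(daily_direct_test_passed: bool,
                 filtrate_turbidity_ntu: float,
                 full_credit_lrv: float = 4.0,
                 triggered_test_lrv: Optional[float] = None) -> Optional[float]:
    """Protozoa LRV credit for an MF/UF unit.

    Returns the full credit when both integrity checks pass. Otherwise the
    credit comes from a triggered direct integrity test; None means the
    result of that test is still pending (an assumption of this sketch).
    """
    if daily_direct_test_passed and not breach_suspected(filtrate_turbidity_ntu):
        return full_credit_lrv
    if triggered_test_lrv is None:
        return None
    # Assumption: a triggered test cannot raise the credit above the full value.
    return min(triggered_test_lrv, full_credit_lrv)


if __name__ == "__main__":
    print(mf_uf_credit(True, 0.05))
    print(mf_uf_credit(True, 0.20))
    print(mf_uf_credit(True, 0.20, triggered_test_lrv=3.2))
```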

Adaptations for potable reuse. The main adaptation being sought for pathogen LRV crediting in MF/UF systems for potable reuse applications is to demonstrate a way to obtain virus credits, which are currently not granted despite research showing measurable log10 reductions.

4) Moving forward

In addition to increasing LRV credits for under-credited treatment processes, new LRV crediting frameworks are needed for uncredited treatment processes. Expanding pathogen crediting frameworks will improve the economics of potable reuse by preventing the addition of unit processes that are not otherwise needed. However, crediting of treatment processes typically requires significant resources to conduct validation studies and gain approval from regulatory bodies.

Regional, national, or even international collaborations could help standardize and reduce the overall cost and effort of developing pathogen crediting frameworks for potable reuse applications. These collaborations require expertise in engineering design, operations, and input from the scientific community. Australia’s WaterVal framework is one example of a national effort to validate pathogen reduction in water treatment processes. This effort involved collaboration between researchers, utilities, regulators, and the private sector. Efforts to develop similar frameworks are underway (e.g., the Global Water Pathogen Project, CalVal in California, WRF 4997 for membrane bioreactors).

Issues 2 and 3 of this series will illustrate how frameworks for pathogen reduction crediting are evolving for treatment processes that are currently uncredited or under-credited, allowing the reuse industry to maximize the benefits of water reuse investments.


The contents of this report are not to be used for advertising, publication, or promotional purposes. Citation of trade names does not constitute an official endorsement or approval of the use of such commercial products by the US Government.

All product names and trademarks cited are the property of their respective owners. The findings of this report are the opinions of the authors only and are not to be construed as the positions of the US Army Corps of Engineers or the US Government unless so designated by other authorized US Government documents.